Fig. 1. DeepSeek and Global AI Change Infographic, Jeremy Swenson, 2025.

Minneapolis—

DeepSeek, the Chinese artificial intelligence company founded by Liang Wenfeng and backed by High-Flyer, has continued to redefine the AI landscape since the explosive launch of its R1 model in late January 2025. Emerging from a background in quantitative trading and rapidly evolving into a pioneer in open-source LLMs, DeepSeek now stands as a formidable competitor to established systems like OpenAI’s ChatGPT and Microsoft’s proprietary models available on Azure AI. This article provides an expanded analysis of DeepSeek R1’s technical innovations, detailed comparisons with ChatGPT and Microsoft Azure AI offerings, and the broader economic, cybersecurity, and geopolitical implications of its emergence.

Technical Innovations and Architectural Advances:

Novel Training Methodologies: DeepSeek R1 combines large-scale reinforcement learning with chain-of-thought reasoning to handle tasks such as advanced mathematics and code generation. Unlike traditional LLMs that rely heavily on supervised fine-tuning, R1 is trained to refine its own reasoning steps, which is reported to make its output clearer and more efficient. In early benchmarking tests, R1 reportedly solved multi-step arithmetic problems in approximately three minutes, compared with roughly five minutes for OpenAI’s o1 model (Sayegh, 2025).

Cloud Integration and Open-Source Deployment: One of R1’s key strengths is its open availability under an MIT license, a stark contrast to the closed ecosystems of its Western counterparts. Major cloud platforms have rapidly integrated R1: Amazon has deployed it via the Bedrock Marketplace and SageMaker, and Microsoft has added it to Azure AI Foundry and the GitHub model catalog. This wide accessibility allows for extensive external scrutiny and customization, and it lets enterprises run the model on infrastructure they control so that sensitive data stays within their own jurisdiction (Yun, 2025; Sharma, 2025).

Detailed Comparison with ChatGPT:

Performance and Reasoning Clarity: OpenAI’s o1 model, available through ChatGPT, has been widely recognized for its robust reasoning capabilities; however, its closed-source nature limits transparency. In direct comparisons, DeepSeek R1 has shown parity, and in some cases superiority, in reasoning clarity. Independent tests by developers indicate that R1’s intermediate reasoning steps are easier to follow, which simplifies debugging and iterative query refinement. For example, in complex multi-step problem-solving scenarios, R1 reportedly delivered correct solutions more rapidly and also provided detailed, human-like explanations of its thought process (Sayegh, 2025).
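To make the point about inspectable reasoning concrete, the following is a minimal sketch of how a developer might separate a reasoning trace from the final answer. It assumes the raw response wraps its reasoning in `<think>...</think>` tags, as the open-weight R1 releases commonly do; the function name `split_reasoning` and the sample text are hypothetical and used only for illustration.

```python
import re

# Assumption: the reasoning trace is wrapped in <think>...</think> tags.
THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(raw: str) -> tuple[list[str], str]:
    """Return (reasoning steps, final answer) from one raw model response."""
    match = THINK_RE.search(raw)
    if match is None:
        # No visible trace: treat the whole response as the answer.
        return [], raw.strip()
    # One reasoning step per non-empty line inside the tags.
    steps = [line.strip() for line in match.group(1).splitlines() if line.strip()]
    # Everything outside the tags is the answer shown to the user.
    answer = (raw[:match.start()] + raw[match.end():]).strip()
    return steps, answer


# Hypothetical response text, not real model output.
sample = "<think>\n12 * 3 = 36\n36 + 4 = 40\n</think>\nThe answer is 40."
steps, answer = split_reasoning(sample)
for number, step in enumerate(steps, 1):
    print(f"step {number}: {step}")
print("answer:", answer)
```

With the steps held in a list, a developer can log them, compare them across prompt revisions, or flag the step at which a calculation goes wrong.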

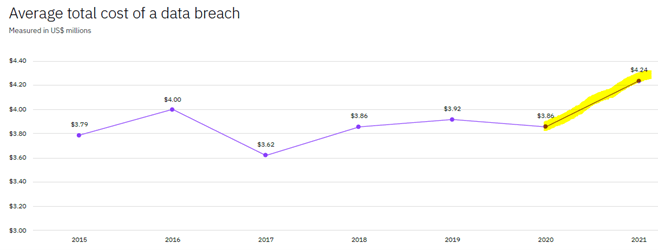

Cost Efficiency and Accessibility: While premium access to ChatGPT’s capabilities can cost users as much as $200 per month, DeepSeek R1 is available free of charge. DeepSeek attributes this reduction in cost to efficient use of computational resources. The company reportedly trained the base model underlying R1 on only 2,048 Nvidia H800 GPUs at an estimated cost of $5.6 million, a fraction of the resources typically required by U.S. competitors (Waters, 2025). Such cost efficiency democratizes access to high-performance AI, providing significant advantages for startups, academic institutions, and small businesses.
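The training-cost figures above can be turned into a rough back-of-envelope estimate. The sketch below assumes a rental price of $2 per GPU-hour, which is an illustrative assumption and not a figure from this article; the GPU count and total cost are the ones quoted in the text.

```python
# Figures quoted in the text.
NUM_GPUS = 2_048
REPORTED_COST_USD = 5_600_000

# Assumption (not from the article): a rental price of $2 per GPU-hour.
ASSUMED_USD_PER_GPU_HOUR = 2.0


def implied_gpu_hours(cost_usd: float, usd_per_gpu_hour: float) -> float:
    """GPU-hours that the quoted budget buys at the assumed hourly price."""
    return cost_usd / usd_per_gpu_hour


def implied_wall_clock_days(gpu_hours: float, num_gpus: int) -> float:
    """Days of continuous training if every GPU runs in parallel."""
    return gpu_hours / num_gpus / 24


gpu_hours = implied_gpu_hours(REPORTED_COST_USD, ASSUMED_USD_PER_GPU_HOUR)
days = implied_wall_clock_days(gpu_hours, NUM_GPUS)
print(f"{gpu_hours:,.0f} GPU-hours, about {days:.0f} days on {NUM_GPUS:,} GPUs")
```

Under that assumed price, the quoted budget corresponds to roughly 2.8 million GPU-hours, or about 57 days of continuous use of the full cluster. The estimate covers compute rental only and says nothing about research, staffing, or earlier experiments.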

Detailed Comparison with Microsoft Azure AI:

Integration with Enterprise Platforms: Microsoft has long been a leader in providing enterprise-grade AI solutions via Azure AI. Recently, Microsoft integrated DeepSeek R1 into Azure AI Foundry, offering customers an additional open-source option that complements its proprietary models. This integration allows organizations to use R1’s reasoning capabilities while relying on Azure’s security, compliance, and scalability features. Unlike some closed-source models that require extensive licensing fees, R1’s MIT license lets organizations tailor the model to their specific needs, maintain data sovereignty, and reduce operational costs (Sharma, 2025).

Performance in Real-World Applications: In practical applications, users on Azure have reported that DeepSeek R1 matches, and sometimes exceeds, the performance of traditional models in complex reasoning and mathematical problem-solving tasks. By deploying R1 inside their own Azure environment rather than sending data to DeepSeek’s hosted service, enterprises can keep sensitive computations within infrastructure they govern, thereby addressing critical data privacy concerns. This approach is particularly valuable in regulated industries, where strict data governance is paramount (FT, 2025).

Market Reactions and Economic Implications:

Immediate Market Response and Stock Volatility: The initial launch of DeepSeek R1 triggered a significant market reaction, most notably an 18% plunge in Nvidia’s stock as investors reassessed the cost structures underlying AI development. The sell-off wiped nearly $1 trillion in combined market value from tech stocks, reflecting widespread concern over the implications of achieving top-tier AI performance with significantly lower computational expenditure (Waters, 2025).

Long-Term Investment Perspectives: Despite the short-term volatility, many analysts view the current market corrections as a temporary disruption and a potential buying opportunity. The cost-efficient and open-source nature of R1 is expected to drive broader adoption of advanced AI technologies across various industries, ultimately spurring innovation and generating new revenue streams. Major U.S. technology firms, in response, are accelerating initiatives like the Stargate Project to bolster domestic AI infrastructure and maintain global competitiveness (FT, 2025).

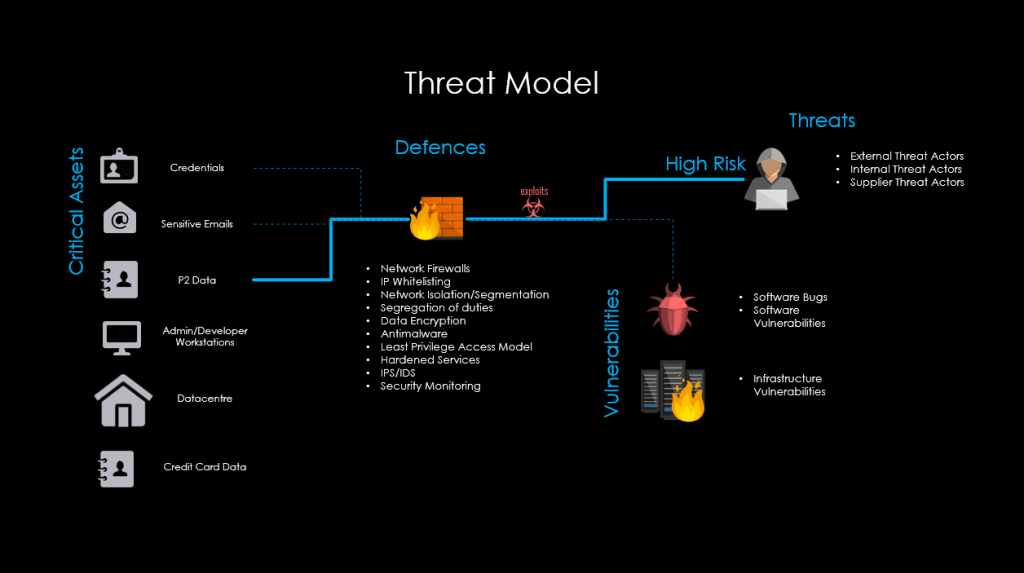

Cybersecurity, Data Privacy, and Regulatory Reactions:

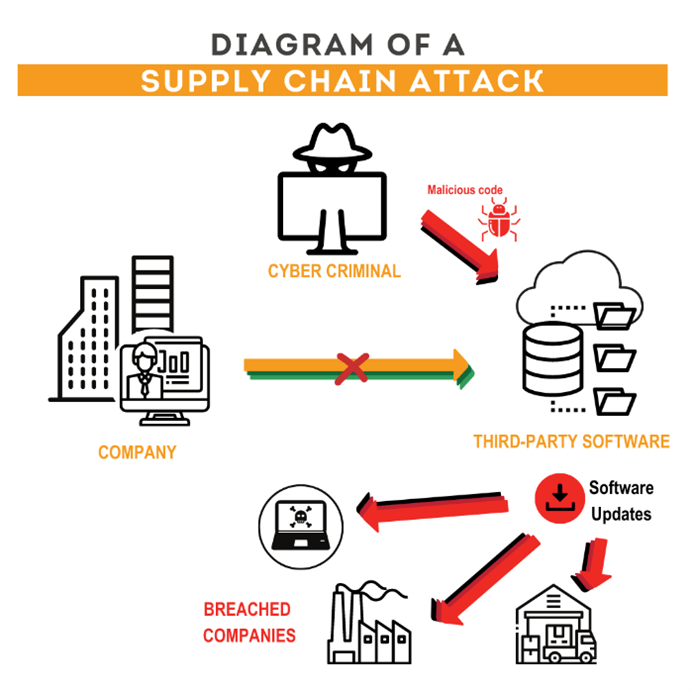

Governmental Bans and Regulatory Scrutiny: DeepSeek’s practice of storing user data on servers in China and its adherence to local censorship policies have raised significant cybersecurity and privacy concerns. In response, U.S. lawmakers have proposed bipartisan legislation to ban DeepSeek’s software on government devices. Similar regulatory actions have been taken in Australia, South Korea, and Canada, reflecting a global trend of caution toward technologies with potential national security risks (Scroxton, 2025).

Security Vulnerabilities and Red-Teaming Results: Independent cybersecurity tests have found that R1 is more prone to generating insecure code and harmful outputs than some Western models. These findings have prompted calls for more rigorous red-teaming and continuous monitoring before the model is deployed at scale. The vulnerabilities underscore the need for both DeepSeek and its adopters to implement robust safety protocols to mitigate potential misuse (Agarwal, 2025).

Geopolitical and Strategic Implications:

Challenging U.S. AI Dominance: DeepSeek R1’s emergence is a clear signal that high-performance AI can be developed without the massive resource investments traditionally associated with U.S. models. This development challenges the long-standing assumption of American technological supremacy and has prompted a strategic reevaluation among U.S. policymakers and industry leaders. In response, initiatives such as the OpenAI-led Stargate Project are being accelerated to ensure that the U.S. maintains its competitive edge in the global AI arena (Karaian & Rennison, 2025).

Localized AI Ecosystems and Data Sovereignty: To mitigate cybersecurity risks, several U.S. companies are now repackaging R1 for localized deployment. By ensuring that sensitive data remains on domestic servers, these firms are not only addressing privacy concerns but also paving the way for the creation of robust, localized AI ecosystems. This trend could ultimately reshape global data governance practices and alter the balance of technological power between the U.S. and China (von Werra, 2025).

Conclusion and Future Outlook:

DeepSeek R1 represents a watershed moment in the global AI race. Its technical innovations, cost efficiency, and open-source approach challenge entrenched assumptions about the necessity of massive compute power and proprietary control. In the comparisons discussed here with OpenAI’s o1 and Microsoft’s Azure AI offerings, R1 shows greater transparency and, in reported tests, faster problem-solving, while also being far more accessible. Despite ongoing cybersecurity and regulatory challenges, the disruptive impact of R1 is catalyzing a broader realignment in AI development strategies. As both U.S. and Chinese technology ecosystems adapt to these shifts, the future of AI appears poised for a more democratized, competitively diverse, and strategically complex evolution.

About The Author:

Jeremy A. Swenson is a disruptive-thinking security entrepreneur, futurist/researcher, and seasoned senior management tech risk and digital strategy consultant. He is a frequent speaker, published writer, podcaster, and even does some pro bono consulting in these areas. He holds a certificate in Media Technology from Oxford University’s Media Policy Summer Institute, an MSST (Master of Science in Security Technologies) degree from the University of Minnesota’s Technological Leadership Institute, an MBA from Saint Mary’s University of Minnesota, and a BA in political science from the University of Wisconsin Eau Claire. He is an alum of the Federal Reserve Secure Payment Task Force, the Crystal, Robbinsdale, and New Hope Community Police Academy (MN), and the Minneapolis FBI Citizens Academy. You can follow him on LinkedIn and Twitter.

References:

- Yun, C. (2025, January 30). DeepSeek-R1 models now available on AWS. Amazon Web Services Blog. Retrieved February 8, 2025, from https://aws.amazon.com/blogs/aws/deepseek-r1-models-now-available-on-aws/

- Sharma, A. (2025, January 29). DeepSeek R1 is now available on Azure AI Foundry and GitHub. Microsoft Azure Blog. Retrieved February 8, 2025, from https://azure.microsoft.com/en-us/blog/deepseek-r1-is-now-available-on-azure-ai-foundry-and-github/

- Waters, J. K. (2025, January 28). Nvidia plunges 18% and tech stocks slide as China’s DeepSeek spooks investors. Business Insider Markets. Retrieved February 8, 2025, from https://markets.businessinsider.com/news/stocks/nvidia-tech-stocks-deepseek-ai-race-nasdaq-2025-1

- Scroxton, A. (2025, February 7). US lawmakers move to ban DeepSeek AI tool. ComputerWeekly. Retrieved February 8, 2025, from https://www.computerweekly.com/news/366619153/US-lawmakers-move-to-ban-DeepSeek-AI-tool

- FT. (2025, January 28). The global AI race: Is China catching up to the US? Financial Times. Retrieved February 8, 2025, from https://www.ft.com/content/0e8d6f24-6d45-4de0-b209-8f2130341bae

- Agarwal, S. (2025, January 31). DeepSeek-R1 AI Model 11x more likely to generate harmful content, security research finds. Globe Newswire. Retrieved February 8, 2025, from https://www.globenewswire.com/news-release/2025/01/31/3018811/0/en/DeepSeek-R1-AI-Model-11x-More-Likely-to-Generate-Harmful-Content-Security-Research-Finds.html

- Karaian, J., & Rennison, J. (2025, January 28). The day DeepSeek turned tech and Wall Street upside down. The Wall Street Journal. Retrieved February 8, 2025, from https://www.wsj.com/finance/stocks/the-day-deepseek-turned-tech-and-wall-street-upside-down-f2a70b69

- von Werra, L. (2025, January 31). The race to reproduce DeepSeek’s market-breaking AI has begun. Business Insider. Retrieved February 8, 2025, from https://www.businessinsider.com/deepseek-r1-open-source-replicate-ai-west-china-hugging-face-2025-1

- Sayegh, E. (2025, January 27). DeepSeek is bad for Silicon Valley. But it might be great for you. Vox. Retrieved February 8, 2025, from https://www.vox.com/technology/397330/deepseek-openai-chatgpt-gemini-nvidia-china